Quantum computing has a branding problem. Not because it’s fake (it’s very real), but because it’s the only field where you can truthfully say,

“We made the error rate go down,” and the internet hears, “Cool, so you can break the internet tomorrow.”

Scientific honesty is the antidote to that gap. And right now, quantum computing needs it more than ever, because the biggest progress in years

(error correction finally working “the way the theory promised”) is arriving at the exact moment the next, subtler theoretical hurdle comes into focus:

once you push ordinary errors down, the rare, correlated, messy, real-world errors become the boss level.

Why “Scientific Honesty” Is Not Just a Virtue: It’s Infrastructure

In most technologies, exaggeration is annoying. In quantum computing, exaggeration is actively dangerous. Why? Because the work is expensive,

the timelines are long, the math is counterintuitive, and a small “demo” can look like a full victory lap to non-specialists.

Scientific honesty doesn’t mean pessimism. It means:

- Stating what was achieved (and what wasn’t).

- Publishing the failure modes, not just the headline metric.

- Using benchmarks that resist hype and can be reproduced or independently sanity-checked.

- Separating “engineering progress” from “cryptography panic.”

This matters because quantum computing isn’t one problem; it’s a stack: physics, fabrication, control systems, compilers, algorithms,

and (quietly, relentlessly) error correction. If one layer is misrepresented, everyone above it inherits the confusion.

The Big Breakthrough That Created a New Problem

The “classic” barrier in quantum computing has been noise. Qubits are fragile, and computation is a long chain of operations where one bad moment

can poison the whole result. The clean theoretical promise has always been: if the physical error rate is low enough, then quantum error correction

can suppress logical errors exponentially as you scale. That turning point is often described as going “below threshold.”

Below Threshold: What It Means (Without the Mystic Fog)

The idea is simple: you encode one logical qubit using many physical qubits. Then you constantly measure

“check” information to detect errors without directly peeking at the stored quantum state. A major approach is the surface code,

prized because it works with local, neighbor-to-neighbor connections, something real chips can do.

Here’s the key subtlety: making an error-correcting code bigger also creates more places for errors to happen. If your physical qubits are too noisy,

scaling makes things worse. If they’re good enough, scaling makes things better, dramatically better. That “flip” is the threshold concept in action.
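
To make the “flip” concrete, here is a minimal sketch using a common rule-of-thumb scaling for surface-code-style logical error rates. The formula, the threshold value `p_th`, and the `prefactor` are illustrative assumptions, not numbers taken from any particular chip or paper.

```python
def logical_error_rate(p, p_th, d, prefactor=0.1):
    """Rule-of-thumb estimate: prefactor * (p / p_th) ** ((d + 1) // 2).

    p         physical error rate (assumed uniform)
    p_th      threshold error rate (assumed, illustrative)
    d         code distance (odd integer)
    prefactor fudge factor (assumed, illustrative)

    The result is capped at 1.0 because the heuristic stops being
    meaningful once it exceeds a probability.
    """
    return min(1.0, prefactor * (p / p_th) ** ((d + 1) // 2))


if __name__ == "__main__":
    p_th = 0.01  # hypothetical threshold
    for p in (0.005, 0.02):  # one value below threshold, one above
        rates = [logical_error_rate(p, p_th, d) for d in (3, 5, 7)]
        print(f"p={p}: {rates}")
```

With the physical error rate below the assumed threshold, each step up in distance shrinks the estimate; above it, the same step makes things worse. The heuristic bakes in independent errors, which is exactly the assumption real hardware strains.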

Willow and the “Yes, This Is Real” Moment

In late 2024, Google introduced its Willow chip and described a milestone the field has chased for decades: as they increased the size of encoded

structures, they saw error rates drop in the way error-correction theory predicts. Their public write-ups emphasize that this is about scaling behavior:

adding qubits to the code can reduce errors rather than inflate them.

That’s huge. It’s also where honesty becomes essential, because “error correction works” is not the same as “a useful, general-purpose quantum computer

is right around the corner.”

Quantum Computing’s Latest Theoretical Hurdle: The Tyranny of Rare, Correlated Errors

Once average errors are suppressed, the system’s limiting factor shifts. The new hurdle is not “noise” in the generic sense.

It’s structured noise: correlated events, leakage, drift, and other non-ideal behaviors that don’t match the neat assumptions

of simplified error models.

From Average Error Rates to Tail Risk

Many familiar metrics describe “typical” behavior: average gate fidelity, average error per cycle, and so on.

But fault-tolerant quantum computing is a marathon. If you want to run millions, or billions, of operations, the outcome is often determined by

the tail of the error distribution: the rare events that happen once in a great while, but wreck everything when they do.

One reason recent results are so informative is that they don’t just celebrate improvement; they probe what starts to dominate once performance gets good.

That’s where the “latest theoretical hurdle” appears: even when you’re below threshold, rare correlated events can become the ceiling.

In practical terms, the problem shifts from “Can we suppress errors?” to “Can we identify, model, and eliminate the weird ones?”
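
A quick back-of-the-envelope sketch shows why the tail matters in long runs. It assumes each operation independently has a tiny probability `q` of triggering a run-killing event; that independence is the optimistic baseline, and the function name and numbers are made up for illustration.

```python
import math


def prob_at_least_one(q, n_ops):
    """P(at least one rare event in n_ops independent operations).

    Equals 1 - (1 - q) ** n_ops, computed with log1p/expm1 so it stays
    accurate when q is tiny. Requires 0 <= q < 1.
    """
    return -math.expm1(n_ops * math.log1p(-q))


if __name__ == "__main__":
    q = 1e-9  # hypothetical per-operation chance of a catastrophic event
    for n_ops in (10**6, 10**9, 10**10):
        print(f"{n_ops:>14,d} ops -> {prob_at_least_one(q, n_ops):.4f}")
```

An event that is invisible in a million-operation benchmark becomes close to certain over ten billion operations, which is why averages alone undersell the problem.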

Correlated Noise: The Part the Simplest Theorems Don’t Hand You for Free

The threshold theorem is a triumph, but it comes with assumptions. Real hardware doesn’t always deliver independent, identical, memoryless errors.

Noise can have spatial correlations (neighboring qubits share a bad day), temporal correlations (your system remembers yesterday), or both.

There is deep theory extending fault tolerance to more complex noise settings, but the conditions are stricter, and the engineering is harder.

This is the honesty point: “Below threshold” is not a magic spell. It’s a statement about scaling under specific assumptions and regimes.

The closer you get to serious computation, the more the details of the noise model matter.
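
Here is a deliberately simple toy, not a surface-code simulation, that shows how a correlated event can put a floor under the benefit of scaling. It uses a classical repetition code with majority voting and assumes that, with probability `q`, a burst corrupts every bit at once. Both the model and the numbers are illustrative assumptions.

```python
from math import comb


def majority_failure(n, p):
    """P(more than half of n bits flip) with independent flips, n odd."""
    k_min = n // 2 + 1
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_min, n + 1))


def failure_with_bursts(n, p, q):
    """Toy model: with probability q a burst corrupts all n bits, which
    always defeats the majority vote; otherwise bits flip independently
    with probability p."""
    return q + (1 - q) * majority_failure(n, p)


if __name__ == "__main__":
    p, q = 0.01, 1e-4  # hypothetical independent and burst probabilities
    for n in (3, 11, 21):
        print(n, majority_failure(n, p), failure_with_bursts(n, p, q))
```

The independent-error column keeps shrinking as the code grows, while the burst column flattens out near `q`. Adding redundancy helps only against the errors the model assumed.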

Leakage: When a Qubit Quietly Stops Being the Qubit You Thought It Was

Some platforms have “leakage” errors, where the system escapes the intended two-level qubit space into other energy levels. Leakage is sneaky:

it can look like random error until you notice it has patterns, persistence, and correlation. The practical implication is brutal:

you may need new protocols, new decoding logic, and even hardware-level design changes to keep leakage from becoming the limiting factor.

The Overhead Reality Check: Logical Qubits Are Expensive on Purpose

Scientific honesty also means saying the quiet part out loud: fault tolerance costs a lot. A logical qubit isn’t “one better qubit.”

It’s a whole organization of qubits, checks, and classical computation working together.

Why Non-Clifford Gates Make Everyone Sweat

Error correction is not just about storing information; it’s about computing on it. Many schemes make some operations relatively “easy”

and others painfully expensive. In many architectures, the costly ingredient is implementing certain gates needed for universal computation.

The overhead often shows up as extra space (more qubits) and extra time (more cycles).

One widely discussed example is how resource-heavy some cryptographic attacks would be, even though the algorithm exists in theory.

The point isn’t “quantum will never do it.” The point is: the distance between a lab demo and a cryptographically relevant machine is measured in engineering orders of magnitude.

Roadmaps Help, If We Read Them Like Adults

Industrial roadmaps can be useful, as long as we treat them as plans (not prophecies). For example, IBM has publicly described a path to a fault-tolerant

system called Starling, including milestones that explicitly mention magic-state techniques and a target scale measured in logical qubits and gate counts.

That specificity is a form of honesty: it anchors ambition to technical requirements.

The Hidden Hero: Classical Computing (Yes, Really)

Fault tolerance is “quantum + classical” all the way down. Every cycle of error correction produces information that must be decoded quickly enough

to keep the computation on track. That means the quantum chip is only half the machine; the other half is the classical control and decoding stack.

Real-Time Decoding Is a Bottleneck You Can’t Hand-Wave Away

If the decoder is too slow, the quantum hardware can’t wait politely; it keeps evolving, accumulating errors. So teams increasingly treat real-time

decoding as a first-class design constraint. This is also a place where progress can look unglamorous (“We optimized a decoder on commodity hardware!”)

but actually matters a lot for scaling.
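
The bottleneck can be sketched as a simple queue. This toy assumes a single decoder that handles one round of check data at a time, with fixed cycle and decode times; the function name and the microsecond values are hypothetical.

```python
def backlog_after(rounds, cycle_time_us, decode_time_us):
    """Rounds of check data still waiting after `rounds` cycles.

    Toy model: the hardware emits one round every cycle_time_us, and a
    single decoder needs decode_time_us per round. The decoder can never
    get ahead of the data that has been produced.
    """
    elapsed_us = rounds * cycle_time_us
    decoded = min(rounds, int(elapsed_us / decode_time_us))
    return rounds - decoded


if __name__ == "__main__":
    for decode_time_us in (0.5, 1.0, 2.0):  # hypothetical decoder speeds
        print(decode_time_us, backlog_after(1000, 1.0, decode_time_us))
```

When the decoder keeps pace, the backlog stays at zero. Once decoding takes longer than a cycle, the backlog grows with every round, so no amount of patience makes up the gap.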

Honesty in the Age of Viral Quantum Headlines

The most responsible quantum announcements include their own brakes. A great example is how major teams explicitly separate “benchmark speedups”

from “cryptographically relevant quantum computers.” That’s not downplaying success; it’s preserving meaning.

Cryptography: The Claim That Needs the Most Care

“Quantum breaks encryption” is the zombie headline that never dies. The honest version is:

large, fault-tolerant quantum computers could threaten some widely used public-key schemes, but current devices are far from that scale,

and the security community is actively migrating to post-quantum cryptography.

If you’re looking for scientific honesty in action, it shows up as:

- Clear statements about current qubit counts versus the scale needed for cryptanalysis.

- Public encouragement to adopt post-quantum cryptography now, because migration takes time.

- Specific timelines and transition goals rather than vague “soon.”

So What Should We Measure (If We Want the Truth)?

If quantum computing is going to be communicated honestly, the field needs metrics that:

(1) correspond to real capability, (2) scale with engineering reality, and (3) can’t be gamed by clever benchmarking.

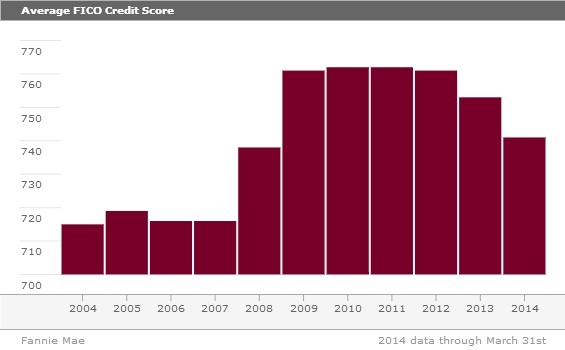

Better Metrics Than “Number of Qubits”

- Logical error rate per cycle (and how it scales with code distance).

- Break-even: when error correction improves lifetime or performance rather than harming it.

- Decoder latency relative to cycle time.

- Logical gate depth achieved reliably, not just once on a good day.

- Resource estimates for meaningful workloads (chemistry, materials, optimization), including overhead.

Notice what’s missing: vibes. Vibes are not a metric. (They are, however, extremely popular on social media.)
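
As one example of turning the first metric into a number, the sketch below estimates an error-suppression factor (often written Λ in the error-correction literature) as the typical ratio between logical error rates at consecutive odd code distances. The function name and the input values are made up for illustration, not measurements.

```python
import math


def suppression_factor(rates_by_distance):
    """Geometric mean of rate(d) / rate(d + 2) over consecutive distances.

    rates_by_distance maps code distance -> logical error rate per cycle.
    A value above 1 means larger codes performed better.
    """
    distances = sorted(rates_by_distance)
    if len(distances) < 2:
        raise ValueError("need at least two code distances")
    if any(b - a != 2 for a, b in zip(distances, distances[1:])):
        raise ValueError("distances must increase in steps of 2")
    ratios = [
        rates_by_distance[a] / rates_by_distance[b]
        for a, b in zip(distances, distances[1:])
    ]
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


if __name__ == "__main__":
    # Illustrative numbers only.
    example = {3: 8e-3, 5: 4e-3, 7: 2e-3}
    print(suppression_factor(example))
```

A single ratio like this is harder to game than a raw qubit count, because it only looks good if scaling the code actually helps.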

Where the Field Is Actually Headed

The honest outlook is exciting: we’re seeing multiple approaches to making error correction less expensive and more practical.

Some aim to build architectures that suppress certain errors intrinsically (so the code has less work to do).

Others focus on new codes, better decoders, modular designs, and tighter integration between quantum and classical layers.

Architectures That Start With Error Correction, Not as an Afterthought

One trend is designing hardware around error correction from day one, rather than bolting it on later. The goal is to reduce overhead:

fewer physical qubits per logical qubit, fewer cycles, and fewer “gotcha” error modes like leakage and long-range correlations.

Small Fault-Tolerant Primitives: The “Hello World” of the Future

Another sign of maturity is the demonstration of fault-tolerant building blocks: tiny pieces of computation carried out in ways that respect

the constraints of error correction. These aren’t flashy applications yet, but they’re the foundation that applications will sit on.

Field Notes: Experiences Around Scientific Honesty and the New Hurdle

If you want to understand scientific honesty in quantum computing, don’t picture a single dramatic “Eureka!” moment. Picture a calendar.

Now imagine that calendar filled with meetings titled “Why did the decoder freak out?” and “Is that spike real or just Tuesday?”

That’s the texture of the work.

A common experience for researchers is discovering that a system can look stable for hours, until it doesn’t. In early stages, teams often fight

obvious issues: noisy gates, unstable calibration, or cross-talk that shows up immediately. Progress looks like steady improvement in average metrics.

Then, as those averages get better, an odd pattern emerges: a rare event appears that doesn’t fit the usual model. It might happen once an hour,

once a day, or only when the lab’s HVAC system decides to become “creative.” The kicker is that these events can dominate outcomes in long runs.

That’s the moment many teams realize the goal isn’t merely “lower errors,” but “understand errors.”

This is where honesty becomes practical rather than philosophical. In lab culture, being honest often means resisting the temptation to delete

inconvenient data points as “outliers.” Sometimes they really are outliers. Sometimes they’re the whole story.

It’s also why careful teams obsess over repeatability: run the experiment again, vary the schedule, change the control pulses, shift the temperature,

swap the decoder settings. The boring repetition is not bureaucracy; it’s how you learn whether the system’s failures are random noise or a clue.

In industry settings, honesty takes a different shape: roadmaps and milestones. Engineers and researchers may be under pressure to simplify,

but the best roadmaps don’t just promise “more qubits.” They specify logical qubits, error correction techniques, and gate counts, because those are the

numbers that reveal whether progress is compounding or just accumulating hardware.

Internally, teams often talk in a language that never appears in headlines: decoder latency, leakage rates, correlated error bursts, calibration drift.

That internal language is where real maturity lives.

On the communication side, scientific honesty is often a balancing act. Researchers want to share genuine breakthroughs, but they also know a single phrase

(“exponential error suppression”) can be misunderstood as “we’re done.” Many communicators have learned to include a “disclaimer with dignity”:

not a hand-wavy caveat, but a clear statement of what is still missing: more code distance, more reliable two-qubit gates, better decoders,

and a deeper understanding of rare correlated failures.

The most encouraging experience, repeated across the field, is that honesty doesn’t slow progress; it accelerates it.

When teams publish limitations, other teams aim directly at those limitations. That’s how a breakthrough becomes a platform.

In quantum computing today, the newest hurdle isn’t a reason to sigh. It’s a sign that the field has advanced far enough to see the next wall clearly,

and that’s exactly the moment when honesty stops being optional and starts being the engine.

Conclusion: The Honest Future Is Still a Big Future

Quantum computing is not “hype” and it is not “magic.” It’s a hard engineering program built on deep theory. The best news of the last year is that

error correction is increasingly behaving the way it’s supposed to. The most important news is what that reveals:

once ordinary errors are suppressed, the battle moves to correlated noise, leakage, tail events, and the heavy overhead of universal fault tolerance.

Scientific honesty is how we keep the story aligned with reality, so we can invest intelligently, secure systems responsibly,

and measure progress in ways that can’t be faked by a good day in the lab.